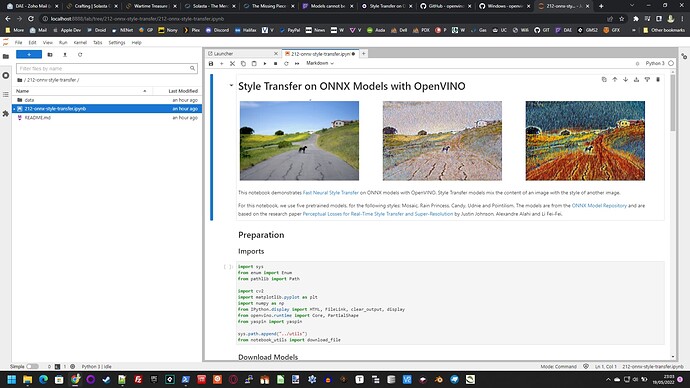

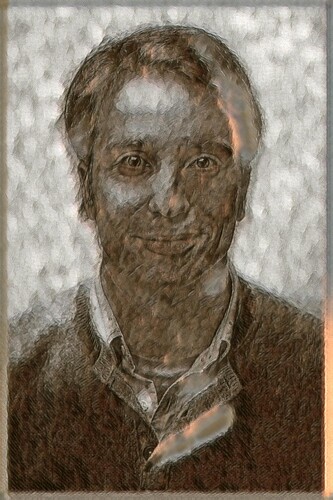

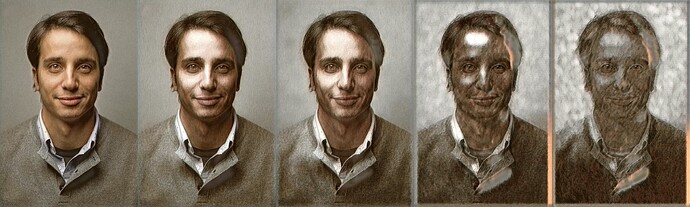

Experimenting can have bad results

Oops - a bit too much style in that one…

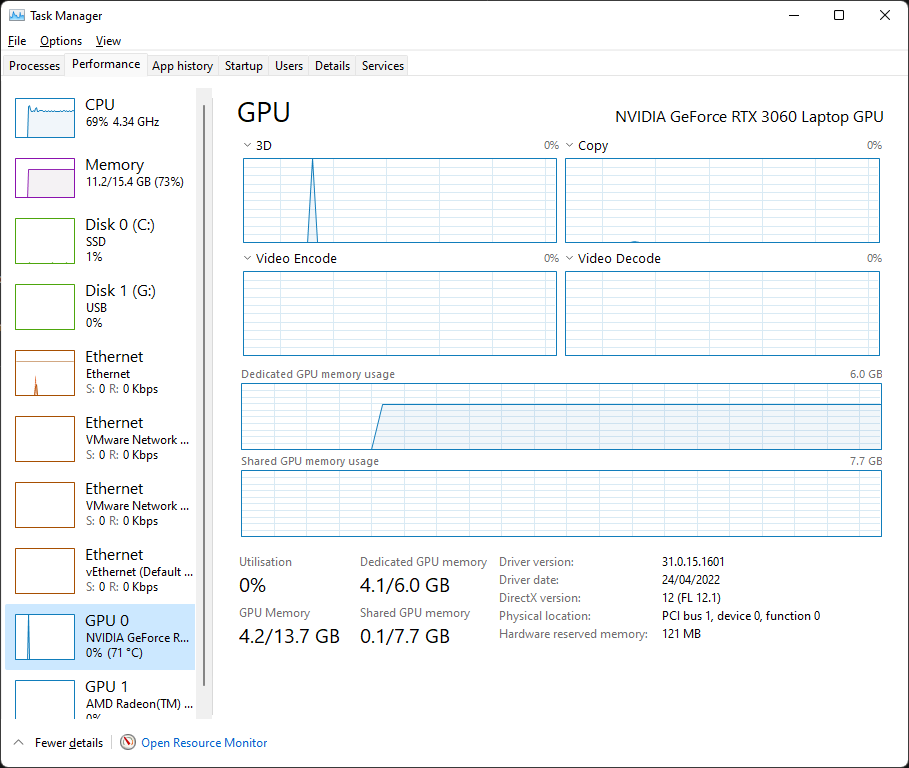

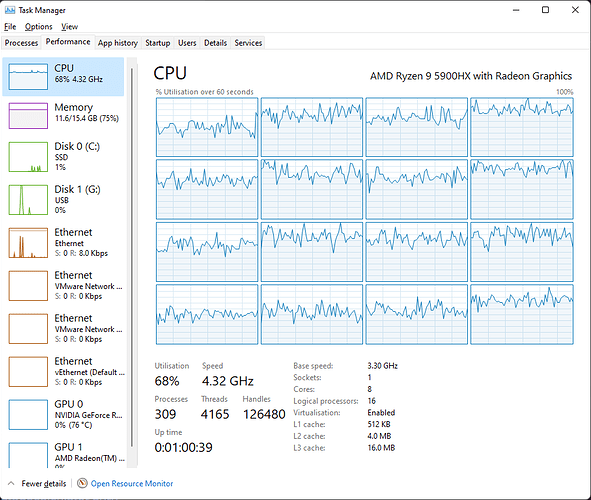

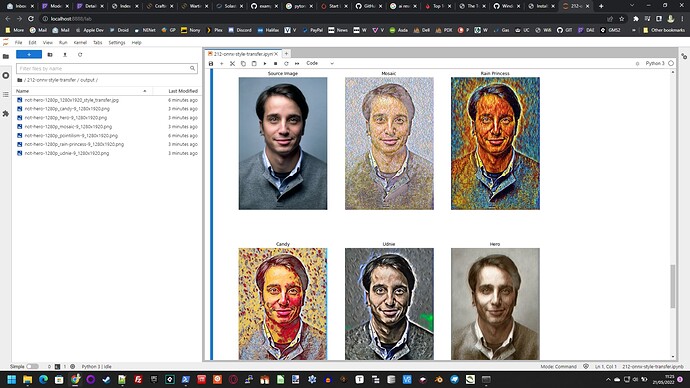

I’ve just started another run using a 256x256 style image, as the ones I’ve tried so far were much bigger (around 1k x 1.4k, so roughly 20x the pixels). It looks like the style image size isn’t affecting the runtime.
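One plausible reason the style image size makes no difference: in the usual fast style transfer setup (Johnson et al.’s perceptual losses, e.g. the PyTorch examples `fast_neural_style` trainer, which I’m only assuming is roughly what’s in use here), the style image goes through the loss network once before training starts, and only the Gram matrices of its feature maps are kept. A Gram matrix is C x C for C channels, so it comes out the same size whether the style image is 256x256 or 1k x 1.4k. A minimal pure-Python sketch (the list-of-channels layout and the C*H*W normalisation are illustrative choices, not taken from any particular codebase):

```python
def gram_matrix(features):
    """Gram matrix of one feature map.

    features: list of C channels, each a flat list of H*W activations.
    Returns a C x C list of lists, normalised by C*H*W.
    """
    channels = len(features)
    positions = len(features[0])  # H * W
    norm = channels * positions
    return [
        [sum(a * b for a, b in zip(features[i], features[j])) / norm
         for j in range(channels)]
        for i in range(channels)
    ]


# Two fake 2-channel feature maps: a "small" 2x2 one and a "big" 8x8 one.
small = [[1.0] * 4, [2.0] * 4]
big = [[1.0] * 64, [2.0] * 64]

# Both reduce to a 2 x 2 matrix; the image size only changes the one-off cost.
print(len(gram_matrix(small)), len(gram_matrix(big)))
```

Since the style Gram matrices are computed once up front, a bigger style image should only add a one-off cost, while the per-batch cost comes from the content images and the networks themselves.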

If you want some pre-built models to experiment with, I can put mine up somewhere. At the moment they’re in PyTorch format (so they’d need converting to ONNX), but at least that’ll save you the 82 hours it takes to train a model. Applying the style is fast; it’s the training that is slow…

Think I’ll drop DAE a line suggesting ONNX support, with a view to letting us create our own Zoo. As I see it, it’s a win-win.

Something else I noticed: my first attempt looks a lot like a plain sepia tint. I ran a sepia tint over the original and that looks completely different (not nearly as good), so style transfer really is happening…
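If you want to repeat that sepia comparison with a number rather than by eye, here’s a minimal sketch. It uses one widely used sepia matrix (I don’t know which sepia filter was actually used above), and a simple mean absolute difference as the comparison; both function names are just for illustration, and images are assumed to be equal-length lists of (R, G, B) tuples in the 0-255 range.

```python
def sepia_tint(pixel):
    """Apply a common sepia matrix to one (R, G, B) pixel with 0-255 channels."""
    r, g, b = pixel
    tr = 0.393 * r + 0.769 * g + 0.189 * b
    tg = 0.349 * r + 0.686 * g + 0.168 * b
    tb = 0.272 * r + 0.534 * g + 0.131 * b
    # Round to whole channel values and clamp anything above 255.
    return tuple(min(255, round(c)) for c in (tr, tg, tb))


def mean_abs_diff(image_a, image_b):
    """Mean absolute per-channel difference between two equal-sized pixel lists."""
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same number of pixels")
    total = sum(
        abs(ca - cb)
        for pa, pb in zip(image_a, image_b)
        for ca, cb in zip(pa, pb)
    )
    return total / (3 * len(image_a))
```

Usage would be something like `mean_abs_diff(styled, [sepia_tint(p) for p in original])`: a large value backs up the “completely different” impression, while a value near zero would suggest the model really is just tinting.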

[Edit] I’ve scrapped the 256x256 run. There was no speed difference, so it was a bad comparison, but I’ll try again once I’ve got a nice model so I can see what difference (if any) a smaller style image makes to the result. Filesize is currently 6.5 MB per model. I’m using COCO 2014 for training with two passes, so about 165k training images in total. I’ll start the next runs overnight (it’s midnight here).
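For a rough sense of the numbers: the COCO 2014 train split has 82,783 images, so two passes is 165,566 images. Assuming the 82 hours mentioned above is for one such two-pass run (my assumption, not stated explicitly), that works out to roughly 1.8 seconds per training image. A quick sanity-check sketch (the function name is just for illustration):

```python
COCO_2014_TRAIN_IMAGES = 82_783  # number of images in the COCO train2014 split


def training_workload(images_per_epoch, epochs, hours):
    """Return (total images seen, seconds per image) for a training run."""
    total_images = images_per_epoch * epochs
    seconds_per_image = hours * 3600 / total_images
    return total_images, seconds_per_image


# Two passes over COCO 2014, assuming the run takes about 82 hours.
total, per_image = training_workload(COCO_2014_TRAIN_IMAGES, epochs=2, hours=82)
print(total, round(per_image, 2))
```

This doesn’t account for batch size or data loading, so treat it as a back-of-the-envelope figure for comparing runs rather than a measurement.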