How do I run an RTX 3070 with the Deep Art Effects GPU version? It doesn’t seem to work whether I use CUDA 9.0 or CUDA 11.2, even following all the instructions.

Which instructions?

https://peardox.com/cuda-cudnn-windows-10/

DAE GPU will only work with CUDA 9.0

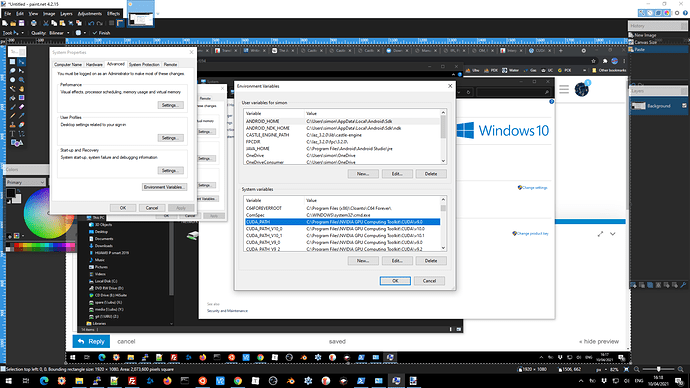

If you’ve got 11.2 installed, changing your CUDA_PATH environment variable may help (if it isn’t already correct).

In Windows Explorer, right-click on ‘This PC’ and select Properties, then click ‘Advanced System Settings’ in the left menu and finally ‘Environment Variables’ in the System Properties window. Now check/alter your CUDA_PATH in the System Variables section of the Environment Variables window.
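If you’d rather check this from a script, here is a minimal Python sketch. It assumes the CUDA installer has also created per-version variables named like `CUDA_PATH_V9_0` and `CUDA_PATH_V11_2` (the usual pattern on Windows, but check your own System Variables). It lists those and reports which version `CUDA_PATH` currently points at.

```python
import os
import re

# Per-version variables such as CUDA_PATH_V9_0 or CUDA_PATH_V11_2
# (assumed naming, as created by the CUDA installer on Windows).
VERSIONED = re.compile(r"CUDA_PATH_V(\d+)_(\d+)", re.IGNORECASE)


def installed_cuda_toolkits(env=None):
    """Return {"9.0": path, ...} for every CUDA_PATH_V<major>_<minor> variable."""
    env = os.environ if env is None else env
    found = {}
    for name, path in env.items():
        match = VERSIONED.fullmatch(name)
        if match:
            found[f"{match.group(1)}.{match.group(2)}"] = path
    return found


def _same_path(a, b):
    # Windows paths are case-insensitive; ignore trailing slashes as well.
    return os.path.normcase(a.rstrip("\\/")) == os.path.normcase(b.rstrip("\\/"))


def active_cuda_version(env=None):
    """Return the version CUDA_PATH points at, or None if it matches none of them."""
    env = os.environ if env is None else env
    active = env.get("CUDA_PATH")
    if not active:
        return None
    for version, path in installed_cuda_toolkits(env).items():
        if _same_path(path, active):
            return version
    return None


if __name__ == "__main__":
    print("Installed:", installed_cuda_toolkits())
    print("CUDA_PATH points at:", active_cuda_version())
```

If it reports 11.2, setting `CUDA_PATH` to the value shown for 9.0 is the change described above.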

I will try this, thank you!

Unfortunately, it does not work. It keeps trying to render indefinitely but never actually starts.

Try a smaller image

One thing I do when having problems like this is to work out some rough limits to the image size.

1. Set TestSize = 1024.

2. Start with an image that’s TestSize x TestSize.

3. Try rendering it with DAE.

4. Did it work?

   - Yes: make TestSize = TestSize + (TestSize / 2) and go to step 2.
   - No: make TestSize = TestSize - (TestSize / 2), restart DAE and go to step 2.

At some point the change in TestSize will be small enough to make repeated testing pointless.

You can use the same image over and over for testing. Start with a big original, keep resizing it to TestSize x TestSize and save that version to test with.

The above method is known as a binary chop. You should get to a sensible number in under 10 tries (you’ll most likely get there a lot faster; 10 is roughly how many halvings it takes to get from 1024 down to 1-pixel accuracy, since 2^10 = 1024).

Note - the choice of 1024 to start is very deliberate - I already know 1540x1540 will fail if 1024x1024 passes.
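The steps above change the size by half of the current value each time, so the guesses can bounce around. A conventional bisection, which remembers the largest size that worked and the smallest that failed, reaches an answer in a fixed number of tries. Here is a minimal Python sketch of that variant. `renders_ok` is a hypothetical callback (DAE has no scripting API that I know of): you render a size x size image by hand and answer y or n. The default upper bound of 2048 is an assumption that a 2048x2048 render fails; with these defaults the first guess is 1024.

```python
def largest_working_size(renders_ok, low=0, high=2048, tolerance=16):
    """Bisect between `low` (assumed to work) and `high` (assumed to fail).

    Returns the largest size known to work (or `low` if nothing worked),
    which lies within `tolerance` pixels of the real limit.
    """
    while high - low > tolerance:
        mid = (low + high) // 2
        if renders_ok(mid):
            low = mid   # mid worked, so the limit is mid or larger
        else:
            high = mid  # mid failed, so the limit is below mid
    return low


def ask(size):
    """Manual test: render a size x size image in DAE, then answer here."""
    answer = input(f"Did a {size}x{size} render finish? [y/n] ")
    if not answer.strip().lower().startswith("y"):
        print("Restart DAE before the next try.")
        return False
    return True


if __name__ == "__main__":
    print("Largest working size:", largest_working_size(ask))
```

With the defaults the gap halves from 2048 to 16 pixels, so it takes at most 7 renders.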

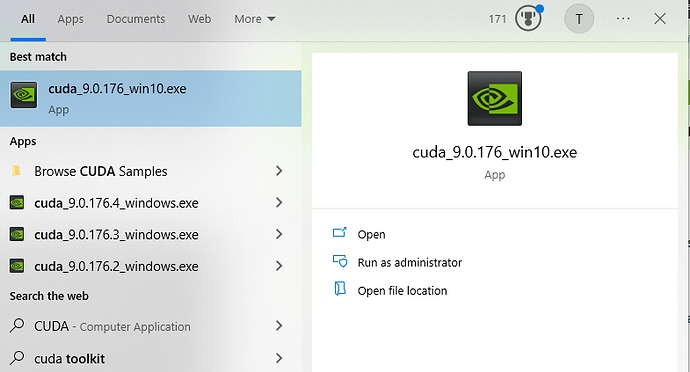

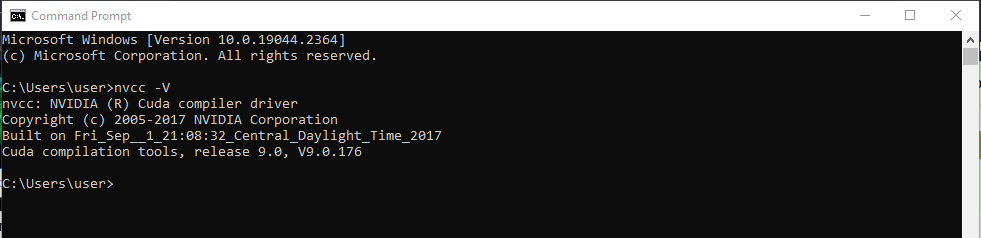

I tried. I believe the reason is that the RTX 3070 is not supported by CUDA 9.0 (at least, the installer said no supported GPU was found). I tried 400x400 px tests and they don’t work either.

Oh well, I suppose DAE GPU had to eventually run into this issue.

To be fair it’s equally NVIDIA’s fault for not making CUDA backwards compatible

I guess you’ll just have to take up bitcoin mining on your 3070 and buy a 1080 with the profits.

I just got 10.0 working

I’ve now got an RTX 3060-equipped laptop.

The card isn’t supported even with CUDA 10.0 installed. I wasn’t sure what would happen; the answer, at present, is absolutely nothing (it won’t render, it just sulks).

The logs look like this…

2021-10-09 00:48:58.219019: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cudart64_100.dll

2021-10-09 00:48:58.342943: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library nvcuda.dll

2021-10-09 00:48:59.561645: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1639] Found device 0 with properties:

name: NVIDIA GeForce RTX 3060 Laptop GPU major: 8 minor: 6 memoryClockRate(GHz): 1.702

pciBusID: 0000:01:00.0

2021-10-09 00:48:59.561715: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cudart64_100.dll

2021-10-09 00:48:59.563926: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cublas64_100.dll

2021-10-09 00:48:59.566039: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cufft64_100.dll

2021-10-09 00:48:59.567147: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library curand64_100.dll

2021-10-09 00:48:59.570950: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cusolver64_100.dll

2021-10-09 00:48:59.573412: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cusparse64_100.dll

2021-10-09 00:48:59.580361: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cudnn64_7.dll

2021-10-09 00:48:59.580453: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1767] Adding visible gpu devices: 0

At this point DAE stops doing anything…

The gpu device 0 actually means it found one (numbering starts at zero; it’d say -1 if nothing was found, AFAIK).

So, a GPU was discovered, but TF didn’t know what to do with it as it’s a 3060 (CUDA 10.0 only supports up to the 2xxx series). CUDA 11.x appears to be required for a 3xxx card.
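The compute capability is already in the log: `major: 8 minor: 6` means CC 8.6. Here is a small Python sketch that pulls the device name and CC out of a pasted TF 1.x log and compares it with 7.5, which I’m assuming is the highest CC that CUDA 10.0 targets natively (the Turing/2xxx generation).

```python
import re

# Matches the device line TensorFlow 1.x printed above, e.g.
# "name: NVIDIA GeForce RTX 3060 Laptop GPU major: 8 minor: 6 ..."
DEVICE_LINE = re.compile(r"name: (?P<name>.+?) major: (?P<major>\d+) minor: (?P<minor>\d+)")

# Assumed: newest compute capability CUDA 10.0 builds for natively.
CUDA_10_0_MAX_CC = (7, 5)


def gpu_from_tf_log(log_text):
    """Return (name, (major, minor)) for the first GPU in the log, or None."""
    match = DEVICE_LINE.search(log_text)
    if match is None:
        return None
    return match.group("name"), (int(match.group("major")), int(match.group("minor")))


if __name__ == "__main__":
    sample = "name: NVIDIA GeForce RTX 3060 Laptop GPU major: 8 minor: 6 memoryClockRate(GHz): 1.702"
    name, cc = gpu_from_tf_log(sample)
    verdict = "newer than CUDA 10.0 supports" if cc > CUDA_10_0_MAX_CC else "OK for CUDA 10.0"
    print(name, "CC %d.%d" % cc, verdict)
```

Tuples compare element by element, so `(8, 6) > (7, 5)` is the whole check.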

There is a huge potential problem here in that Tensorflow 1.x (used by DAE) only goes up to CUDA 10 (and lower versions, like the recommended 9.0). Support for newer cards only arrived with Tensorflow 2.x, which is not backwardly compatible with TF1.

This implies that DAE will need to update to TF2 or become obsolete as 3xxx cards become the norm.

It should also be noted that CUDA 9.0 only supports cards up to the 1xxx series, so without a CUDA 10.0 build of Tensorflow it seems possible that 2xxx cards may not function out of the box (I have CUDA 10.0 builds of TF but no 2xxx card to test on).

More investigation will be required as I’ve not had the new laptop long enough to look into the situation in depth.

I’m going to compile up a CUDA 11.2 version of TF2 just to see what happens when I have some free time (still installing everything ATM)

I really hope the creators of this amazing program do a code update to enable newer cards to work.

I loved this software so much I bought the full version on PC, and in January 2020 I bought a Radeon VII thinking of how fast and amazing it would run through my GPU. Amateur mistake, as it is not a CUDA card. So much regret, as I intended to use it primarily for Deep Art Effects. 8 weeks ago I was lucky enough to snap up a 3080 and was so excited to finally use my Deep Art Effects GPU software to render 4k video files!!! After discovering it was incompatible and the software doesn’t work with the newer GPU, I was gutted, to be honest. I have tried the CUDA 9 option for hours, but I couldn’t get it to work either.


This is a plea to the creators of this amazing software to PLEASE PLEASE please do an update that supports newer cards such as the 3080s etc. If there was an update I would be so happy I could then attempt to play around with 4k footage etc and actually use this software far beyond the limited CPU version.

Come on Deep Art Effects… you can do this!

Best regards,

Neil B

The problem is that Tensorflow, which is the bit that does the AI, updated to version 2 without any backward compatibility for older versions of Tensorflow. When they did this, they made some modifications to the Python API to allow for a fairly painless transition, but they also dropped Java completely (and DAE is written in Java).

My own experiments show that Tensorflow v1.15 works fine with CUDA 10.0, which supports cards up to and including the 2080 (but not some very old cards; anything pre-2014 is unsupported).

NVIDIA define their cards with a “Compute Capability” (CC), e.g. a 1xxx card has a CC of 6.1, a 2xxx has a CC of 7.5 and a 3xxx card has a CC of 8.6 (see https://en.wikipedia.org/wiki/CUDA).

CUDA itself is linked to the CC of the card: CUDA 9 is for CC 6, CUDA 10 does 7 and CUDA 11 does 8 (presumably 4xxx cards will have a CC of 9+ and require CUDA 12).

An important fact is that CUDA 11 and Tensorflow 2 support CC from 3.5 up to 8.6 therefore if DAE were to cater for CUDA 11 just about all cards would be catered for.
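The CC rules above can be written down as a lookup. This Python sketch uses the CCs of cards mentioned in this thread plus the native CC range I understand each toolkit to cover (CUDA 9.0: 3.0 to 7.0, CUDA 10.0: 3.0 to 7.5, CUDA 11.2: 3.5 to 8.6). Treat those ranges as assumptions to check against NVIDIA’s release notes.

```python
# Native compute-capability range (lowest, highest) per CUDA toolkit.
# Assumed values; check NVIDIA's CUDA release notes.
CUDA_CC_RANGE = {
    "9.0": ((3, 0), (7, 0)),
    "10.0": ((3, 0), (7, 5)),
    "11.2": ((3, 5), (8, 6)),
}

# Compute capabilities of cards mentioned in this thread.
CARD_CC = {
    "GTX 1050 Ti": (6, 1),
    "RTX 2080": (7, 5),
    "RTX 3060 Laptop": (8, 6),
    "RTX 3070": (8, 6),
    "RTX 3080": (8, 6),
}


def toolkits_for(card):
    """List the toolkits whose native CC range includes the card's CC."""
    cc = CARD_CC[card]
    return [version for version, (lowest, highest) in CUDA_CC_RANGE.items()
            if lowest <= cc <= highest]


if __name__ == "__main__":
    for card in CARD_CC:
        print(f"{card}: CUDA {', '.join(toolkits_for(card))}")
```

Only the CUDA 11.2 entry covers the 3xxx cards, which is why DAE would need a CUDA 11 (and therefore TF2) build for them.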

As I mentioned above, Tensorflow dropped Java support with TF2, but this was taken over by a group of people (whom I’ve yet to contact) to keep the Java API alive; it’s available at https://github.com/tensorflow/java. That would allow DAE to work with TF2, thereby supporting all modern cards. The problem, however, is that this needs to be built into DAE itself in place of the existing TF1 code, and to do that you need the DAE source code…

You mentioned you bought a Radeon. That is also ‘possible’ with TF2 via something called ROCm

The size of the images you can process with a GPU is limited by the GPU’s memory, and a 1920x1080 image in Tensorflow appears to need around 6 GB (I don’t know exactly). In fact, I know that a 2048 chunk size died with 6 GB while a 1024 chunk is fine, and that 2 GB could manage 512. In other words, the amount of memory roughly quadruples each time you double the chunk size: it’s O(N^2) in the chunk side length N.
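The O(N^2) rule turns into simple arithmetic: doubling the chunk side multiplies the memory by four. Here is a Python sketch, assuming memory is purely proportional to the chunk side squared (real usage also has some fixed overhead) and treating a known figure such as “1024 fits in 6 GB” as the cost of that chunk size, which overestimates if it actually needs less.

```python
def estimated_memory_gb(chunk, ref_chunk, ref_gb):
    """Scale a known memory figure, assuming memory grows with chunk side squared."""
    return ref_gb * (chunk / ref_chunk) ** 2


def largest_power_of_two_chunk(budget_gb, ref_chunk, ref_gb, start=64):
    """Double the chunk size while the estimate stays within budget.

    Returns `start` even if `start` itself is over budget.
    """
    chunk = start
    while estimated_memory_gb(chunk * 2, ref_chunk, ref_gb) <= budget_gb:
        chunk *= 2
    return chunk


if __name__ == "__main__":
    # 1024 fits in 6 GB, so 2048 should need about four times as much.
    print(estimated_memory_gb(2048, 1024, 6.0))
    print(largest_power_of_two_chunk(8.0, 1024, 6.0))
```

An estimate of 24 GB for a 2048 chunk is consistent with it dying on a 6 GB card.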

Finally the image has to be transferred to the GPU for processing and this is not especially fast.

Hello Peardox,

Have there been ANY announcements on an update to support newer GPUs??

First time I’ve been on for a while… hope you’re doing OK.

Newer cards will soon be supported with the JAN 2023 update, which is now in beta. It will also include a large bunch of new technologies.

Hi Peardox,

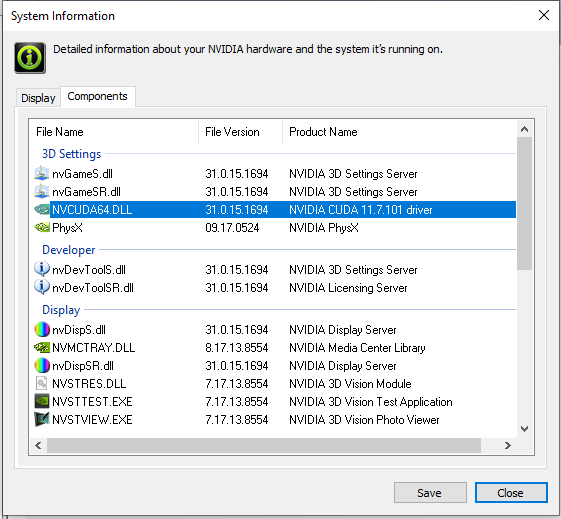

I’ve just started using DAE and spent the last two days with this problem. I’ve found something in Nvidia Control Panel. Could this be the problem?

Hi @Attila ,

From the screenshot you posted, I can see your NVIDIA driver reports CUDA 11.7. For the Deep Art Effects Desktop client to work with a GPU on Windows, you have to install the CUDA 9.0 Toolkit. We support GPU rendering with the use of CUDA 9.0 (an older version). The list of supported graphics cards can be found here: https://developer.nvidia.com/cuda-gpus

Further instructions can be found here: How To: Install Deep Art Effects GPU

With the January 2023 update, newer cards will also be supported.

With kind regards,

Camelia

Hi Camelia,

Thank you for your reply. Besides the driver, everything else says I use the 9.0 version. Could I change it somehow?

My GPU is actually a GeForce GTX 1050 Ti; I forgot to mention it. It can use CUDA, though.

Hi @Attila, you could change the driver from version 11 to version 9, but this means you would have to downgrade your system. It is generally not recommended to downgrade drivers unless there is a specific need or issue that can only be resolved by doing so. Please note that the version 9 driver may not be compatible with your graphics card or may not support certain features that are available in the version 11 driver. Also, you are using a GeForce GTX GPU, and I cannot guarantee that it will work.

Additionally, downgrading the driver can potentially cause issues with the stability and performance of your system. I’d suggest waiting for the update in January 2023.

Kind regards,

Camelia

Hi Camelia,

Thank you for looking into my case. I’ll wait for the update.

Kind Regards,

Attila

A new GPU update is available: